An Introduction to Large Language Models: What You Need to Know

- Author: Princee Rithikka

- Posted On: June 14, 2024

AI has advanced significantly, especially with the development of large language models (LLMs) in recent years. These models, like OpenAI’s GPT-4 and Google’s BERT, are changing the way we use technology. Knowing what LLMs are and how they work is crucial for anyone curious about AI’s future and its use in different industries.

In this blog, we explore what LLMs are, how they work, their benefits and limitations, and where they are heading.

What are Large Language Models?

Large language models are advanced AI systems designed to understand and generate human language. They learn from extensive text datasets, which lets them perform tasks like text generation, translation, and summarization with high accuracy. Well-known examples include GPT-4 and BERT. GPT-4 excels at generating coherent, relevant text, while BERT is designed to understand language by reading the context on both sides of each word. With chat-oriented models such as GPT-4, you can hold a conversation, ask questions, have them write poems, and translate text.

Types of Large Language Models

Some widely known types of large language models include:

GPT Series (GPT-3, GPT-4)

The GPT (Generative Pre-trained Transformer) series from OpenAI is well known for its ability to generate text. GPT-3 and GPT-4 produce clear, relevant text that fits the context of a prompt. They are widely used in chatbots, content creation, and many other applications.

BERT

Google’s BERT (Bidirectional Encoder Representations from Transformers) focuses on understanding language rather than generating it. Unlike GPT, which reads text from left to right, BERT considers the words on both sides of each position at once, making it well suited to tasks such as question answering and sentiment analysis.
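To make the GPT versus BERT difference concrete, here is a minimal Python sketch of the attention masks behind it, assuming a toy three-word sentence. A value of 1 means a word may look at another word. The function names are hypothetical, not taken from any library.

```python
def causal_mask(n):
    """GPT-style mask: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """BERT-style mask: every position may attend to every position."""
    return [[1] * n for _ in range(n)]


tokens = ["the", "cat", "sat"]
masks = [("causal", causal_mask(len(tokens))),
         ("bidirectional", bidirectional_mask(len(tokens)))]

for name, mask in masks:
    print(name)
    for tok, row in zip(tokens, mask):
        # List the words this token is allowed to "see".
        visible = [t for t, m in zip(tokens, row) if m]
        print(f"  {tok!r} sees {visible}")
```

In the causal mask, "cat" cannot see the later word "sat"; in the bidirectional mask, every word sees the whole sentence.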

Other LLMs (T5, XLNet)

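Other important models in the field include T5 (Text-To-Text Transfer Transformer) and XLNet. T5 treats every NLP task as a text-to-text problem, where both the input and the output are plain text. XLNet improves on BERT by training over many different word orderings (permutation language modeling), which lets it learn context from both directions without relying on BERT’s masked tokens.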
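Because T5 casts every task as text in, text out, the task itself is written into the input as a short prefix. A minimal sketch, assuming prefixes in the style of the examples in the T5 paper (such as “translate English to German:” and “summarize:”); the helper function is hypothetical and does not call a model.

```python
def to_text_to_text(task, **fields):
    """Format a task as a plain-text model input, T5-style (hypothetical helper)."""
    if task == "translate":
        return f"translate {fields['src']} to {fields['tgt']}: {fields['text']}"
    if task == "summarize":
        return f"summarize: {fields['text']}"
    if task == "acceptability":
        # Grammatical-acceptability task, prefixed with its benchmark name "cola".
        return f"cola sentence: {fields['text']}"
    raise ValueError(f"unknown task: {task}")


print(to_text_to_text("translate", src="English", tgt="German", text="That is good."))
print(to_text_to_text("summarize", text="State authorities dispatched emergency crews..."))
```

For the translation input, the model is trained to output the German sentence itself (“Das ist gut.”), again as plain text, so one model and one training objective cover every task.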

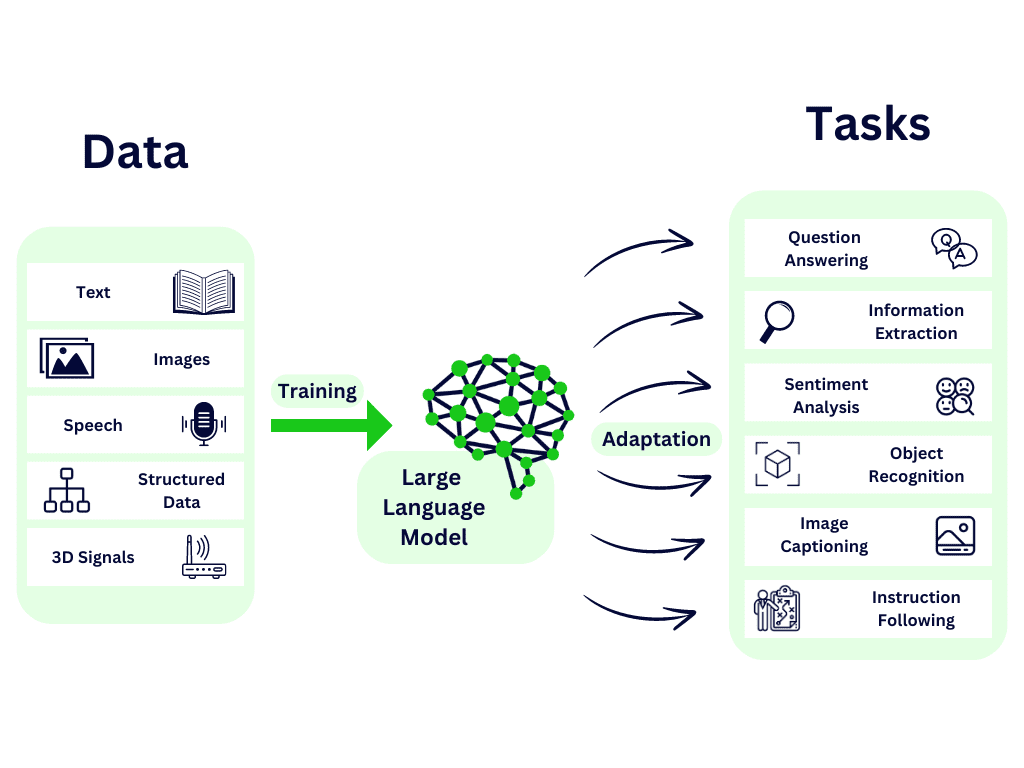

How Do Large Language Models Work?

Large language models, such as the models behind ChatGPT, analyze large volumes of text to learn language patterns and relationships. Think of them as well-read friends who have absorbed information from books, articles, and websites. In the same way, LLMs learn from a vast collection of text, much of it gathered from the web.

For instance, if you ask ChatGPT, “What is a cat?”, it draws on patterns learned during training to answer that a cat is a small, furry animal often kept as a pet, and that cats meow, purr, and hunt mice. LLMs excel at generating responses based on the text they were trained on.

LLMs use machine learning to get better at producing human-like text during training. Once trained, a model does not keep learning from conversations unless it is retrained or fine-tuned. Although LLMs lack human-like comprehension or consciousness, they are adept at identifying language patterns and producing coherent responses.

Neural Networks and Deep Learning

LLMs are trained with deep learning, a branch of machine learning built on multi-layer neural networks. By processing large volumes of text, these networks learn the patterns and structures that capture the nuances of language.

The Transformer Architecture

LLMs are built on the transformer architecture, which handles long-range dependencies and context effectively. Transformers use a mechanism called self-attention to weigh how relevant every other word in a sequence is to each word, which makes them better at understanding and generating text.
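The self-attention step can be sketched in a few lines of plain Python. This is a minimal illustration, assuming tiny hand-picked 2-dimensional embeddings; real transformers first pass each embedding through learned linear projections to get separate query, key, and value vectors, use many attention heads, and work with vectors of hundreds or thousands of dimensions.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted mix of all values."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this word's query to every word's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        # Blend the value vectors according to the attention weights.
        out = [sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Hypothetical 2-dimensional embeddings for a three-word sentence.
tokens = ["the", "cat", "sat"]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# For simplicity, the embeddings are used directly as queries, keys, and values.
outputs, weights = self_attention(x, x, x)
for tok, w in zip(tokens, weights):
    print(tok, [round(v, 2) for v in w])
```

Each row of weights shows how much one word attends to every word in the sentence; words whose vectors point in similar directions receive more weight.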

Training

LLMs are first pretrained on large, varied text datasets. GPT-style models learn by predicting the next word in a sequence, while BERT learns by predicting words that have been hidden (masked) in a sentence. Through this process they pick up grammar, word usage, and how context shapes meaning.
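The next-word objective can be sketched as follows: every prefix of a sentence becomes a training example whose target is the word that comes next, and training minimizes the average cross-entropy, the negative log-probability the model gave to the correct word. The probability values are made up for illustration, and whole words stand in for the subword tokens real models use.

```python
import math


def next_token_pairs(tokens):
    """Pair each prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(prob_of_target):
    """Average negative log-probability assigned to the true next tokens."""
    return -sum(math.log(p) for p in prob_of_target) / len(prob_of_target)


tokens = ["the", "cat", "sat", "on", "the", "mat"]
for context, target in next_token_pairs(tokens):
    print(context, "->", target)

# Hypothetical probabilities a model assigned to each correct next token.
probs = [0.2, 0.5, 0.6, 0.9, 0.4]
print(round(cross_entropy(probs), 3))
```

A lower loss means the model assigned higher probability to the words that actually came next; training adjusts the network’s weights to push this number down across billions of examples.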

Fine-Tuning

After this initial training, developers often fine-tune LLMs on smaller datasets for particular tasks, such as sentiment analysis or question answering. Fine-tuning improves their accuracy on those tasks and lets one pretrained model be adapted to many different applications.
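Fine-tuning often updates some or all of a model’s weights. The lightest variant, sketched here, keeps the pretrained model frozen and trains only a small classification head on the features it produces. The feature values, labels, and function names below are hypothetical stand-ins for a real model’s outputs.

```python
import math

# Hypothetical frozen features a pretrained model might output for four reviews.
features = [[0.9, 0.1], [0.8, 0.3], [0.2, 0.7], [0.1, 0.9]]
labels = [1, 1, 0, 0]  # 1 = positive sentiment, 0 = negative


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(xs, ys, lr=0.5, epochs=500):
    """Fit a logistic-regression head with gradient descent; the features stay frozen."""
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of binary cross-entropy with respect to the logit
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(xs)
    return w, b


def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


w, b = train_head(features, labels)
print([predict(w, b, x) for x in features])
```

Training only the head is cheap because the large pretrained network is never updated; fuller fine-tuning trades more compute for higher accuracy on the target task.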

Benefits of LLMs

Improved Language Understanding

LLMs can perform tasks such as translation, summarization, and question-answering accurately by understanding language context and nuances. This ability makes them valuable tools across various industries.

Versatility in Applications

Large language models are very versatile: a single model can draft emails, answer customer questions, and summarize documents. This lets organizations automate routine tasks and provide personalized user experiences.

Enhanced Text Generation

They are highly effective at creating human-like text, which makes them well suited to chatbots, automated content creation, and similar uses. They can generate text that makes sense, stays on topic, and keeps readers interested.

Challenges and Limitations of LLMs

Large language models face several challenges. One major issue is that they can produce responses that sound confident but are incorrect, or that reflect biases present in their training data. This can spread misinformation or reinforce biases.

Additionally, these models require substantial computational resources and energy, which puts training and running the largest models out of reach for many researchers and organizations. There are also privacy concerns, because models can memorize and later reproduce sensitive information that appeared in their training data.

Lastly, these models can lose track of context and coherence in long conversations or complex topics, partly because they can only process a limited amount of text (their context window) at once. This can lead to responses that don’t make sense or aren’t relevant. These challenges show why it’s important to use these models carefully and to keep researching solutions.
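When a conversation outgrows the context window, applications typically drop the oldest messages, and anything in them is lost to the model. This hypothetical helper sketches that policy, assuming one token per whitespace-separated word (real tokenizers split text into subword pieces).

```python
def fit_to_context(messages, max_tokens):
    """Keep the most recent messages whose combined length fits in max_tokens.

    Assumption: one token per whitespace-separated word.
    """
    kept, used = [], 0
    for msg in reversed(messages):  # walk from newest to oldest
        n = len(msg.split())
        if used + n > max_tokens:
            break
        kept.append(msg)
        used += n
    return list(reversed(kept))  # restore chronological order


conversation = [
    "My name is Asha and I live in Chennai.",
    "What is a good weekend trip?",
    "Somewhere within driving distance, please.",
    "What was my name again?",
]
print(fit_to_context(conversation, max_tokens=12))
```

With a 12-token budget, the first message no longer fits, so the model would have no way to answer the final question about the user’s name.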

How Can These Challenges and Limitations Be Reduced?

To address the challenges of large language models, you can use several strategies. First, ensure training data is diverse and representative to reduce bias and improve accuracy. Update and fine-tune models regularly with new data to keep them relevant and correct errors. Protect sensitive information by implementing strong privacy measures like differential privacy. Optimize algorithms to be more efficient, reducing the computational resources and energy needed. Develop better methods to handle context and coherence in conversations to improve response quality and relevance. These steps are essential for creating more reliable and responsible language models.
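For differential privacy during training, a widely used approach is DP-SGD, which limits how much any single training example can influence a model update. The sketch below shows only its core aggregation step, assuming per-example gradients are already available; it omits the privacy accounting a real system needs, and the function names and values are hypothetical.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """DP-SGD style step: clip each example's gradient, sum, add Gaussian noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    # Noise is scaled to the clipping bound, which caps any one example's influence.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # hypothetical per-example gradients
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds each example’s contribution and the added noise hides whether any particular example was present, which makes it harder for the trained model to memorize individual records.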

Future Trends in Large Language Models

Advances in Model Architecture

Researchers continually develop advanced model architectures to boost efficiency, accuracy, and versatility. Innovations in neural networks and transformer models will drive this progress forward.

Increasing Accessibility and Efficiency

Developers aim to make LLMs more accessible and efficient, so that a wider range of businesses and individuals can use them. They also focus on creating lighter models that need fewer resources to operate.
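Quantization is one widely used way to build such lighter models: each weight is stored in 8 bits instead of 32 bits of floating point, at the cost of a small rounding error. This minimal sketch assumes simple symmetric per-tensor scaling and uses hypothetical weight values; production libraries use more refined schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical model weights
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
print(q, scale)
print([round(a, 3) for a in approx])
```

Each stored value now needs one byte instead of four, and because rounding moves each value by at most half a step, every recovered weight stays within `scale / 2` of the original.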

Integration with Other AI Technologies

LLMs are increasingly being combined with other AI technologies, such as computer vision and robotics. This integration creates more powerful and comprehensive AI systems, expanding their capabilities and applications.

Conclusion

Large language models are a big leap in artificial intelligence. They offer many benefits and can be used in various industries. These models have challenges, but their potential for innovation and efficiency makes them valuable for businesses. As we develop and improve these models, they will have a greater impact on our daily lives and work. Embrace the future of AI with our expert Large Language Model Development services and stay ahead in the tech race.

FAQ

A Large Language Model (LLM) is a type of artificial intelligence trained on massive amounts of text data to understand, interpret, and generate human-like language. It uses deep learning techniques to predict the next word in a sentence, answer questions, write content, and assist with complex language tasks.

LLMs use neural networks – especially transformer architectures – to learn patterns in language. By training on billions of words, they learn grammar, context, style, and meaning. When given a prompt, they generate responses by predicting the most likely next words based on their training.
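This prediction loop can be sketched with a toy stand-in for a trained model: a bigram table that records which word follows which in a tiny corpus. The sketch uses greedy decoding, always appending the single most likely next word; real LLMs condition on the entire preceding text rather than one word, and often sample instead of always taking the top choice.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which word follows which (a toy stand-in for a trained LLM)."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_words=5):
    """Greedy decoding: repeatedly append the most likely next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat . the cat chased the mouse . the dog sat on the rug ."
model = train_bigram(corpus)
print(generate(model, "the dog", max_new_words=4))
```

Here greedy decoding produces "the dog sat on the cat", because "cat" is the most frequent word after "the" in the corpus, which shows how a model that only picks likely continuations can still produce odd text.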

Examples include generative models like GPT-4, BLOOM, LLaMA, and other transformer-based systems. These models vary in size and capability but share the ability to understand and generate coherent text.

LLMs are versatile and can be used for:

- Content writing and summarization

- Language translation

- Text classification and sentiment analysis

- Interview and chat automation

- Code generation

- Data extraction and analysis

They support tasks across business, education, marketing, customer support, and research.

Large language models don’t “understand” text like a human but learn statistical relationships between words and phrases. They analyze patterns from training data and use attention mechanisms to focus on relevant context when generating responses.
